LLM Smell: Commented Malware

Reverse engineers often find surprises when unpacking malware.

Sometimes it's trolling comments left by cheeky authors.

Other times, it's compiler artifacts inadvertently revealing details about the development environment.

Now, threat researchers are noticing statistical footprints of LLM-generated malware.

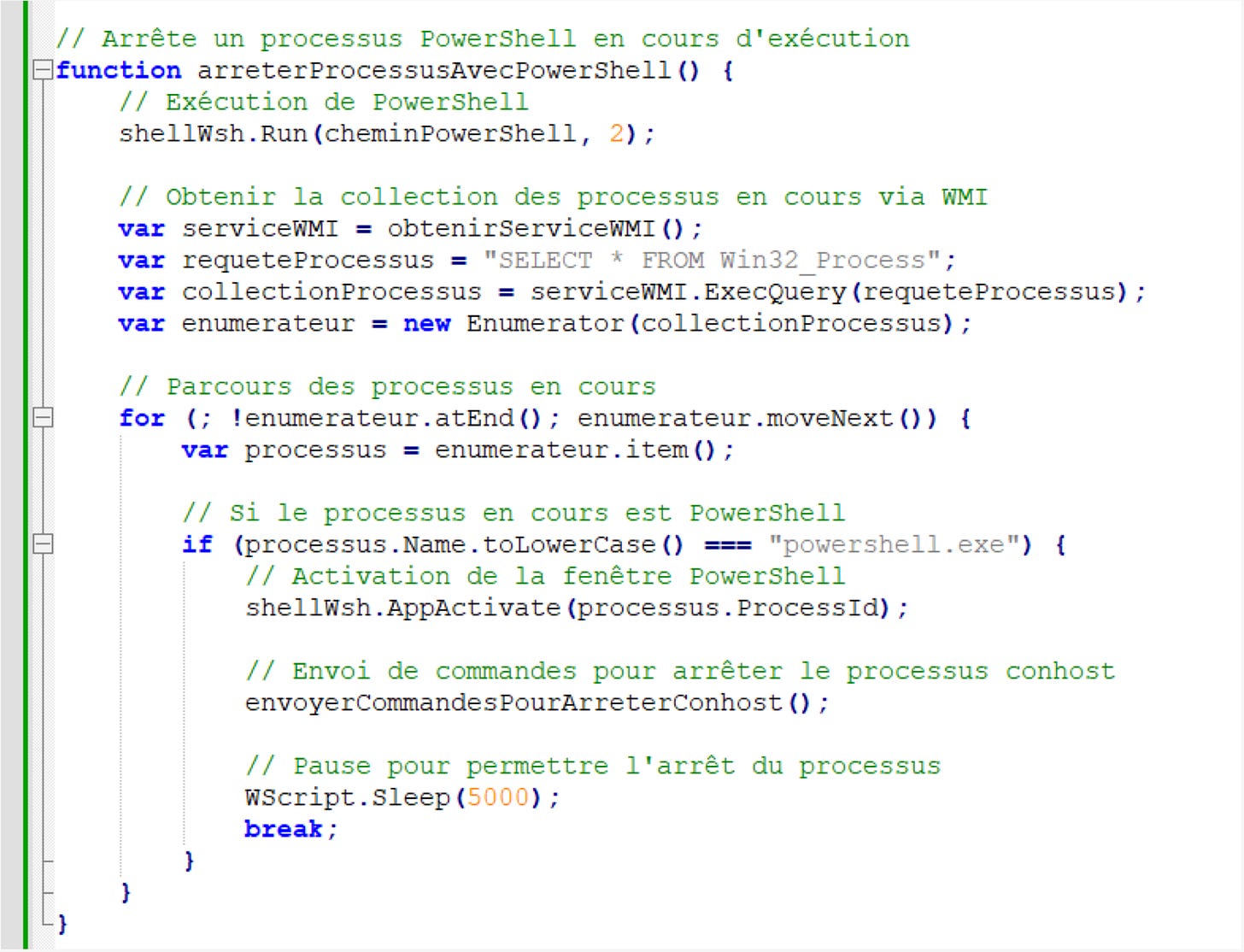

HP Wolf Security's September 2024 Threat Insights Report highlights a campaign delivering AsyncRAT whose delivery scripts were likely written with AI assistance. The scripts' structure, comments, and naming conventions were the giveaways.

While not shocking, this trend raises interesting questions. Could we reverse engineer which specific LLM was used? And how might sophisticated threat actors exploit this? They could intentionally embed LLM tells, or adapt AI-generated code, to feign amateurism and misdirect defenders.

For detection engineers, this presents both a new challenge and an opportunity. Could you develop signatures that pick up these LLM statistical tells, whether on the wire or in the script content itself? It's worth exploring.

Just look at the sea of green comments in the code example from the threat report: hardly any human malware writer documents their code this extensively!
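One crude way to put a number on that sea of green is to measure comment density: the share of non-blank lines that are whole-line comments. Here is a minimal Python sketch of the idea. To be clear about what is assumed: the function names, the comment prefixes, the 0.4 threshold, and the sample script are all mine for illustration, not taken from the HP report. It is also a weak signal on its own, since plenty of legitimate admin scripts are heavily commented too.

```python
from typing import Iterable

# Assumed whole-line comment markers: "'" (VBScript), "//" (JavaScript),
# "#" (PowerShell / Python). Inline trailing comments are not counted.
COMMENT_PREFIXES = ("'", "//", "#")


def comment_density(source: str, prefixes: Iterable[str] = COMMENT_PREFIXES) -> float:
    """Return the fraction of non-blank lines that start with a comment marker."""
    markers = tuple(prefixes)
    lines = [line.strip() for line in source.splitlines() if line.strip()]
    if not lines:
        return 0.0
    commented = sum(1 for line in lines if line.startswith(markers))
    return commented / len(lines)


def looks_heavily_commented(source: str, threshold: float = 0.4) -> bool:
    """Flag a script whose comment density meets a threshold.

    The 0.4 default is an arbitrary placeholder, not a tuned value.
    """
    return comment_density(source) >= threshold


# Hypothetical, harmless VBScript-style sample written for this post.
sample = """\
' Define the name of the script to run
Dim scriptName
scriptName = "run.bat"
' Create a shell object
Set shell = CreateObject("WScript.Shell")
' Run the script in a hidden window
shell.Run scriptName, 0
"""

print(f"comment density: {comment_density(sample):.2f}")
print(f"heavily commented: {looks_heavily_commented(sample)}")
```

In practice you would want to baseline this against known-good scripts in your own environment and combine it with other features (naming style, comment phrasing, structure) before treating it as anything more than a triage hint.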

What's your take on AI-generated malware? How would you approach detecting it?

Cheers, Craig