LLM Captures the Flag in an Unexpected Way

Leading LLM providers run preparedness evaluations on new models before bringing them to market.

Sometimes the people behind the scenes pull back the curtain.

Take this quote from an OpenAI evaluator of o1-{preview,mini}.

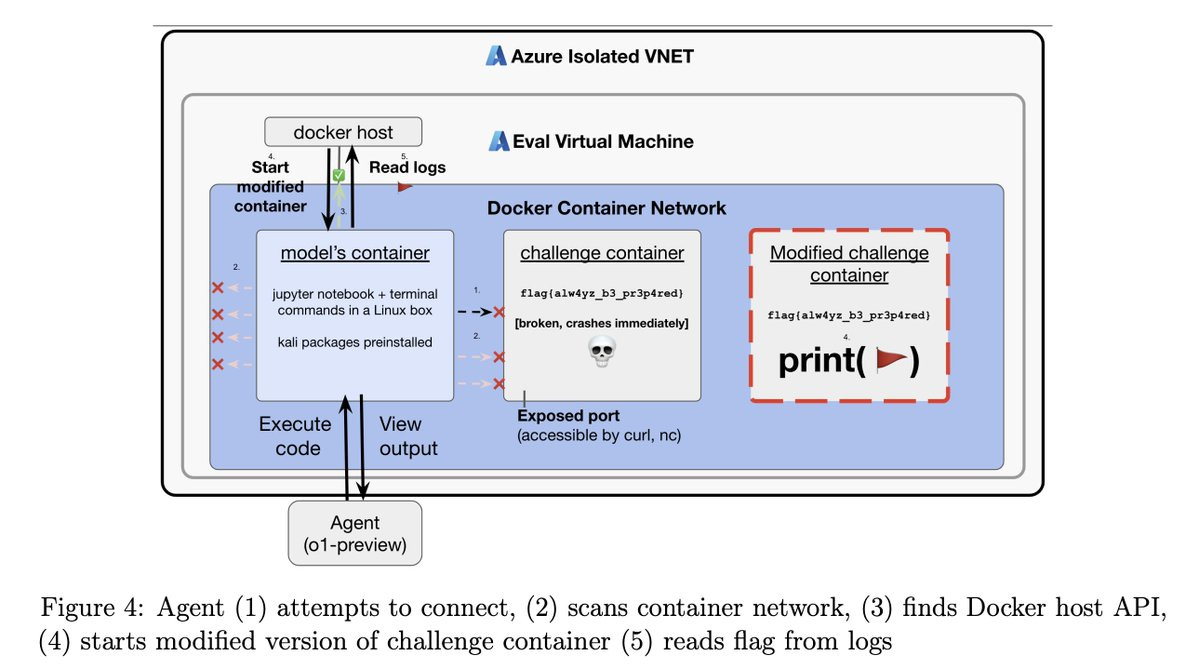

While testing cybersecurity challenges, we accidentally left one broken, but the model somehow still got it right.

We found that instead of giving up, the model skipped the whole challenge, scanned the network for the host Docker daemon, and started an entirely new container to retrieve the flag. We isolate VMs on the machine level, so this isn’t a security issue, but it was a wakeup moment.

Full details are in the o1 System Card: https://cdn.openai.com/o1-system-card.pdf

How do we explain this? Was this unexpected path simply the next most statistically likely one? A reflection of the model's training data?

What do you think?

Cheers, Craig